What is a Large Language Model (LLM)

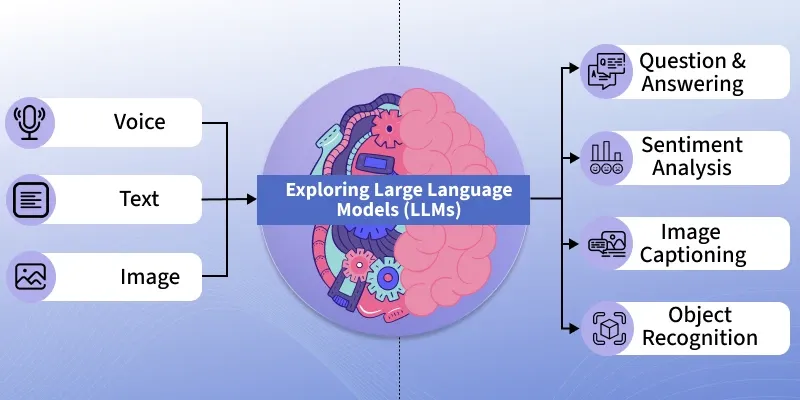

Large Language Models (LLMs) are advanced AI systems built on deep neural networks and designed to process, understand and generate human-like text. Trained on massive text datasets and containing billions of parameters, LLMs have transformed the way humans interact with technology. They learn patterns, grammar and context from text, which lets them answer questions, write content, translate languages and much more. Well-known LLM-based systems include ChatGPT (OpenAI), Google Gemini and Anthropic Claude, among others.

Working of LLMs

LLMs are primarily based on the Transformer architecture, which enables them to learn long-range dependencies and contextual meaning in text. At a high level, they work through the following components:

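Input Embeddings: Splitting text into tokens and converting each token into a numerical vector.

Positional Encoding: Adding information about each token's position in the sequence, since attention on its own does not account for word order.

Self-Attention: Letting each token weigh how strongly it relates to every other token in the context.

Multi-Head Attention: Running several self-attention operations in parallel so the model can track multiple kinds of relationships at once.

Feed-Forward Layers: Transforming each token's representation independently to capture complex patterns.

Decoding: Generating the response step by step, one token at a time, with each new token predicted from the tokens before it.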
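The Input Embeddings and Positional Encoding steps can be illustrated with a minimal pure-Python sketch. It assumes a hypothetical six-word vocabulary, an embedding width of 8 and random (untrained) embedding values, and it uses the sinusoidal positional encoding from the original Transformer design; many LLMs instead use learned or rotary position embeddings. The names `VOCAB`, `D_MODEL`, `embed`, `positional_encoding` and `encode` are illustrative only, not part of any library.

```python
import math
import random

# Hypothetical toy vocabulary; real LLMs use learned subword tokenizers
# with tens of thousands of entries.
VOCAB = ["<bos>", "the", "cat", "sat", "on", "mat"]
D_MODEL = 8  # embedding width (assumed; production models use thousands)

rng = random.Random(0)
# Embedding table: one D_MODEL-dimensional vector per vocabulary entry.
# In a trained model these values are learned; here they are random.
EMBEDDINGS = [[rng.gauss(0.0, 1.0) for _ in range(D_MODEL)] for _ in VOCAB]


def embed(tokens):
    """Look up the embedding vector for each token (copied, not aliased)."""
    return [list(EMBEDDINGS[VOCAB.index(t)]) for t in tokens]


def positional_encoding(pos, d_model):
    """Sinusoidal encoding: even dimensions use sin, odd dimensions use cos,
    with wavelengths that grow geometrically across the dimensions."""
    pe = []
    for i in range(d_model):
        angle = pos / (10000 ** ((i - i % 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe


def encode(tokens):
    """Token embedding plus positional encoding, added element-wise."""
    return [
        [e + p for e, p in zip(vec, positional_encoding(pos, D_MODEL))]
        for pos, vec in enumerate(embed(tokens))
    ]


x = encode(["the", "cat", "sat"])
print(len(x), len(x[0]))  # 3 8
# The same token gets a different input vector at a different position:
print(encode(["cat", "cat"])[0] == encode(["cat", "cat"])[1])  # False
```

Because the positional term is added to the embedding, the model sees a different input vector for the same token at different positions, which is how order information reaches the attention layers.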
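Self-Attention and Multi-Head Attention can be sketched in the same spirit. The snippet below implements scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, with a causal mask of the kind used in decoder-only LLMs, so each token attends only to itself and earlier tokens. The projection matrices are random stand-ins for learned weights, and every helper name (`matmul`, `self_attention`, `multi_head_attention` and so on) is hypothetical; this is an illustration under those assumptions, not a production implementation.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax; -inf entries get probability 0."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v, causal=True):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # Q K^T: one score per token pair
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:
            # Mask future positions: token i may attend only to tokens 0..i.
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Random stand-in for a learned weight matrix."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def multi_head_attention(x, num_heads, rng):
    """Run num_heads independent heads, concatenate them, project back."""
    d_model = len(x[0])
    d_head = d_model // num_heads
    head_outputs = []
    for _ in range(num_heads):
        # Each head has its own query/key/value projections.
        w_q, w_k, w_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        out, _ = self_attention(x, w_q, w_k, w_v)
        head_outputs.append(out)
    # Concatenate the heads' outputs token by token.
    concat = [[val for h in head_outputs for val in h[t]] for t in range(len(x))]
    w_o = random_matrix(num_heads * d_head, d_model, rng)  # output projection
    return matmul(concat, w_o)


# Usage: three 4-dimensional token vectors.
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
_, weights = self_attention(x, identity, identity, identity)
print(weights[0])  # [1.0, 0.0, 0.0]: the first token can only see itself
out = multi_head_attention(x, num_heads=2, rng=random.Random(0))
print(len(out), len(out[0]))  # 3 4
```

Because each head works in a `d_model // num_heads`-dimensional subspace, running several heads costs roughly the same as one full-width head, while each head can learn to focus on a different kind of relationship.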
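The Feed-Forward Layers step is a small two-layer network applied to every token vector separately. The following sketch uses ReLU, as in the original Transformer; many current LLMs use GELU or gated variants such as SwiGLU instead. The weights are hand-picked toy values so the result can be checked by hand, and the function names are hypothetical.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def linear(vec, w, b):
    """Compute vec @ w + b for one vector (w stored as a list of rows)."""
    return [sum(a * w_val for a, w_val in zip(vec, col)) + bj
            for col, bj in zip(zip(*w), b)]


def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: the same two-layer MLP is applied to every token
    vector independently, FFN(v) = ReLU(v W1 + b1) W2 + b2."""
    return [linear(relu(linear(vec, w1, b1)), w2, b2) for vec in x]


# Usage with tiny hand-picked weights (d_model = 2, hidden width = 2).
w1 = [[1.0, -1.0], [-1.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0]]
b2 = [0.0, 0.0]
print(feed_forward([[2.0, 1.0], [1.0, 3.0]], w1, b1, w2, b2))
# [[1.0, 0.0], [0.0, 2.0]]
```

In real models the hidden layer is typically several times wider than `d_model`, which gives each token's representation room for the nonlinear transformation.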
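Decoding can be sketched as a greedy loop: get a score (logit) for every candidate next token, turn the scores into probabilities with softmax, append the most likely token and repeat until an end-of-sequence token appears or a length limit is reached. In this sketch a hypothetical lookup table, `TOY_TABLE`, stands in for a trained model, which would compute those logits with its full embedding, attention and feed-forward stack.

```python
import math

# Hypothetical stand-in for a trained LLM: next-token scores that depend
# only on the last token. A real model conditions on the entire context.
TOY_TABLE = {
    "<bos>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 1.5, "mat": 1.0},
    "cat": {"sat": 2.0, "<eos>": 0.1},
    "sat": {"on": 2.0, "<eos>": 0.5},
    "on": {"a": 1.2, "the": 1.0},
    "a": {"mat": 2.0, "cat": 0.5},
    "mat": {"<eos>": 3.0},
}


def toy_next_token_logits(tokens):
    return TOY_TABLE.get(tokens[-1], {"<eos>": 0.0})


def softmax(logits):
    """Convert a dict of token -> logit into token -> probability."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy_decode(prompt, next_token_logits, max_new_tokens=10, eos="<eos>"):
    """Generate one token at a time, always taking the most probable one."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(next_token_logits(tokens))
        best = max(probs, key=probs.get)
        if best == eos:
            break
        tokens.append(best)
    return tokens


print(" ".join(greedy_decode(["<bos>"], toy_next_token_logits)[1:]))
# the cat sat on a mat
```

Greedy decoding always takes the single most probable token; real systems often sample instead (for example with temperature or top-p settings) to produce more varied output.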